Introduction and application of ORB-SLAM3

Short introduction to SLAM

In the ever-changing world of technology, researchers and developers keep pushing into uncertain and unexplored areas. One field that deals with the uncertain and unexplored quite literally is SLAM. SLAM (Simultaneous Localization And Mapping) is a method for mapping an unfamiliar area while simultaneously localizing the agent that runs SLAM within that same map. Two kinds of SLAM are in common use today: visual SLAM (uses images acquired from cameras and other image sensors) and LiDAR SLAM (uses a laser or a distance sensor). In this article, we focus on visual SLAM and, in particular, on one of its solutions, ORB-SLAM3 [1], presented by Carlos Campos, Richard Elvira, Juan J. Gómez Rodríguez, José M. M. Montiel and Juan D. Tardós. You’ll also find short set-up instructions for the ORB-SLAM3 library (version 1.0) along with our Dockerfile, which makes the installation easier to manage. We also explain how to use the ORB-SLAM3 library to implement a SLAM system and how to make practical use of the files it produces.

Image 1. ORB-SLAM3 map viewer window and point cloud with camera’s frames [2].

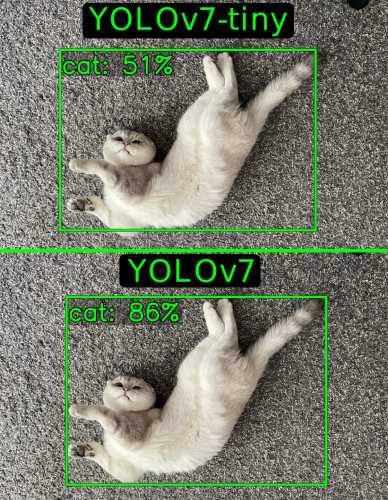

ORB-SLAM3 is a versatile and accurate visual SLAM solution for monocular, stereo and RGB-D cameras. It computes the camera trajectory and a sparse 3D reconstruction of the scene in real time in a wide variety of environments, ranging from small hand-held sequences of a desk to a car driven around several city blocks [2]. ORB-SLAM3, the newest version of ORB-SLAM, is the first real-time SLAM library able to perform visual, visual-inertial and multi-map SLAM with monocular, stereo and RGB-D cameras, using pin-hole and fisheye lens models.

Image 2. System components of ORB-SLAM3 [1].

Compared with the previous version, ORB-SLAM2, the latest version improves relocalization: it can recover even when tracking is lost and visual information is poor. Its robustness and accuracy [1, Table 1] make it one of the best systems in this field. After reading this article, you’ll have a baseline understanding of how to use the ORB-SLAM3 library efficiently and effectively.

Setup of ORB-SLAM3 V1.0

According to the official installation instructions, setting up the ORB-SLAM3 library is simple: you need to take care of the dependencies and build the library itself. A short summary of those steps is provided below. If you would like to use Docker to install the dependencies and the library, you can skip to the section on building via Docker.

Dependencies

If you want to use the open-source ORB-SLAM3 library (released under the GPLv3 license), first make sure that all of its dependencies are installed.

Necessities

All the prerequisites for smooth usage of ORB-SLAM3 are listed in the library’s GitHub repository and, in short form, below. Take care of them before using the ORB-SLAM3 library.

Compiler: one that supports C++11 or C++0x.

Pangolin: used for visualization and the user interface. See the project’s download and install instructions.

OpenCV: used to manipulate images and features. See the project’s download and install instructions.

Eigen3: required by g2o. See the project’s download and install instructions.

Python: used to calculate the alignment of the trajectory with the ground truth. Required module: Numpy.

ROS: since the ORB-SLAM3 library includes examples that process input from monocular, monocular-inertial, stereo, stereo-inertial and RGB-D cameras using ROS, we recommend setting up ROS as the base system for your SLAM implementation.

Notice: check whether your base system (e.g. ROS Melodic) is compatible with the versions of the libraries required by ORB-SLAM3. If it is not (e.g. ROS Melodic is not compatible with the newest version of Pangolin), you need to either choose a different base system or check out the branch of the library that contains a version compatible with your base system before proceeding (which is what you would have to do in the ROS Melodic case).

Building ORB-SLAM3 library and examples via terminal

To build the library and the examples, run the commands below:

mkdir ORB_SLAM3

git clone https://github.com/UZ-SLAMLab/ORB_SLAM3.git ORB_SLAM3

cd ORB_SLAM3

chmod +x build.sh

./build.sh

Now the library file libORB_SLAM3.so should be in the lib folder and the example executables in the Examples folder.

All of the information about the different examples and how to use them can be found in the repository’s README.

Building ORB-SLAM3 library and examples via Docker

Docker containers are units of software that isolate applications from their environment, which makes them runnable virtually anywhere. That’s why you can use a Docker container that holds the dependencies and the library and run it in an environment of your choice. Below is the Dockerfile that we used to set up the ORB-SLAM3 library on a ROS Melodic base image.

FROM ros:melodic-perception

# install the required packages

RUN apt update && apt install -y build-essential

RUN apt update && apt install -y cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

RUN apt update && apt install -y python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libdc1394-22-dev

RUN apt update && apt install -y python3 python3-pip

RUN apt update && apt install -y git

RUN apt update && apt install -y libboost-all-dev

RUN apt update && apt install -y libomp-dev

RUN apt update && apt install -y libeigen3-dev

RUN apt update && apt install -y libgl1-mesa-dev

RUN apt update && apt install -y libglew-dev

RUN apt update && apt install -y pkg-config

RUN apt update && apt install -y libegl1-mesa-dev libwayland-dev libxkbcommon-dev wayland-protocols

RUN apt update && apt install -y libssl-dev

RUN apt update && apt install -y libcanberra-gtk-module libcanberra-gtk3-module

RUN apt update && apt install -y libgnutls28-dev

RUN apt update && apt install -y ros-melodic-usb-cam

RUN apt update && apt install -y ros-melodic-realsense2-camera

RUN apt update && apt install -y ros-melodic-sophus

# build pangolin

WORKDIR /

RUN mkdir pangolin

RUN git clone https://github.com/stevenlovegrove/Pangolin.git /pangolin

WORKDIR /pangolin

RUN git checkout v0.6

RUN mkdir build

WORKDIR /pangolin/build

RUN cmake ..

RUN cmake --build .

# install and build ORB_SLAM3

ADD . /ORB_SLAM3/

RUN git clone https://github.com/UZ-SLAMLab/ORB_SLAM3.git ORB_SLAM3

WORKDIR /ORB_SLAM3

RUN chmod +x build.sh

RUN ./build.sh

# extract vocabulary

WORKDIR /ORB_SLAM3/Vocabulary

RUN tar -xf ORBvoc.txt.tar.gz

Once you’ve created the Dockerfile inside the ORB_SLAM3 directory, you can run the commands below to build the image and create a container from it:

cd ORB_SLAM3

docker build --tag orb-slam:1.0 .

docker create --name orb-slam orb-slam:1.0

Now you should be able to use this orb-slam image as the base image of a new one, e.g. your ROS wrapper. To do so, add this line at the top of the Dockerfile of any new image that needs the ORB-SLAM3 library:

FROM orb-slam:1.0

This initializes a new build stage and sets the orb-slam image as the base for subsequent instructions. Since the ORB-SLAM3 library is not likely to change, keeping it in a separate image is a good option for portability.

Usage of ORB-SLAM3

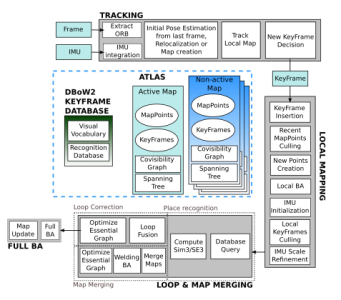

Since we focus on the ORB-SLAM3 library for visual sensor based applications, the natural choice of sensor is a camera. One option is a mono camera, but it is less accurate than a stereo camera and SLAM initialization takes longer. A stereo camera, on the other hand, provides a perception of depth. By processing the images from a stereo camera we can not only obtain the distance to different objects but also perceive the scene in three dimensions, which solves the problem of determining the scale of the environment. This matters for libraries such as ORB-SLAM3, which save images and extract features for later recognition and comparison in three-dimensional point clouds, and which should produce maps that match the real world in scale. A mono camera can be combined with an additional sensor, e.g. an IMU, to recover scale, but in our experience the result is less precise than with a stereo camera. If you choose a camera that publishes already rectified images (we used the Intel RealSense depth camera D435i), you save code and time because you don’t have to process the images yourself. If your camera does not provide rectified images, you will need to rectify them manually.
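To make the link between stereo images and metric scale concrete, here is a minimal sketch of the standard pinhole stereo relation for a rectified image pair, Z = f * B / d. It is not ORB-SLAM3 code: the function name and the sample focal length, baseline and disparity values are assumptions chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in metres of a point seen in a rectified stereo pair.

    Standard pinhole stereo relation: Z = f * B / d, where f is the focal
    length in pixels, B the distance between the two cameras in metres and
    d the horizontal pixel shift of the point between the left and right image.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers: 600 px focal length, 5 cm baseline.
near = depth_from_disparity(600.0, 0.05, 30.0)  # large shift: close object
far = depth_from_disparity(600.0, 0.05, 3.0)    # small shift: distant object
print(near, far)
```

Because the baseline is a known physical length, the computed depth comes out in metres, which is why a stereo setup can fix the scale of the map while a single camera cannot do so on its own.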

Image 3. Difference between raw and rectified cropped images [3].

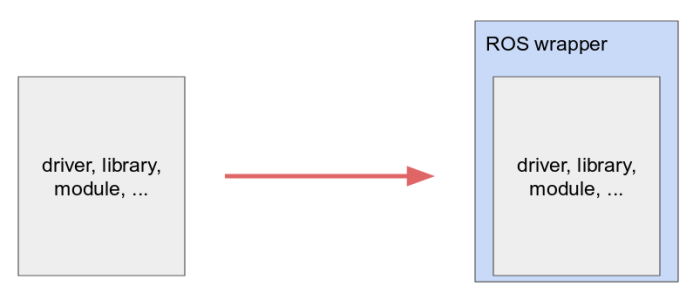

To actually use ORB-SLAM3, you also need a program that uses the library, just like each of the examples shipped with it. Another possibility is to use a ROS wrapper (a ROS wrapper is simply a node that you create on top of a piece of non-ROS code in order to create ROS interfaces for that code). There are some examples to get inspiration from: wrappers for ORB-SLAM2 (these should also work with the newest ORB-SLAM3 library version) and wrappers for ORB-SLAM3.

Image 4. ROS wrapper [6].

If you integrate the ORB-SLAM3 library into a system that already uses ROS, you can create the ROS wrapper mentioned above and combine ROS functionality with ORB-SLAM3 as a separate subsystem that you fully control. We see value in a ROS wrapper because it gives you an interface that communicates with ORB-SLAM3 and with the other parts of the system at runtime, using the functionality ROS already provides. For example, with just a few lines of code you can create services for loading and saving maps or control the SLAM system from any existing device in the system. These are only a few examples of the practicality that a ROS interface adds on top of the ORB-SLAM3 library.

Mapping and localization

When creating an ORB-SLAM3 system object, you can choose whether to create a new map or use a premade one. One of the parameters passed when the object is initialized is a .yaml settings file. Besides the camera parameters, this file should contain the paths of the atlas .osa files. Atlas files with the .osa extension are binary files that hold information about all the maps that have been created, as well as the maps themselves.

When creating a new atlas, this line must be added to the end of your .yaml file:

# File directory

System.SaveAtlasToFile: "./yourdirectory/atlas"

When using an existing atlas (with the possibility to save it, possibly changed, later), these lines must be added to the end of your .yaml file:

System.LoadAtlasFromFile: "./yourdirectory/atlas"

System.SaveAtlasToFile: "./yourdirectory/atlas"

The directories and the file names in the lines above can differ from each other. The library loads the atlas from the path given in LoadAtlasFromFile and saves it to the path given in SaveAtlasToFile. Once the agent has moved along a path and you’re ready to save your new or adjusted map, invoke the Shutdown function, as shown below:

slam.Shutdown();

This function shuts down the system that uses the library and also saves the atlas file. The atlas file can be saved in text or binary format. A text file may sound easier for a human to read, but because the ORB-SLAM3 library stores all the information through the serialization part of the Boost library, as an archive, the .osa file is still just a sequence of bytes that represents a serialized atlas. A text archive takes up more space, and since a serialized atlas gathers its parts from different sections of the library, the serialized map is a complex object; working out where a particular part of the file came from is not worth the effort.

Nevertheless, a binary .osa file is a must if you ever need to localize or navigate in previously created maps. As mentioned above, it holds the atlas and a keyframe database; without this file there is no way to tell the ORB-SLAM3 library about the maps you created and the paths you walked, so we recommend saving a binary .osa file for possible future use. In addition to the atlas file, the library provides another useful feature: human-readable text files. Unlike the atlas, these text files contain only the coordinates of the camera frames and the timestamps at which the coordinates were saved, without the additional information kept in the atlas file. That makes these trajectory files well suited to self-made manual path planning, which can then be used for various types of navigation (text, speech, etc.). They are not mandatory for the ORB-SLAM3 library, however, so we recommend saving them only if you plan to add parts to your system that would make use of them.

The trajectory files can be saved in three formats: KITTI, EuRoC and TUM. Example visual-inertial datasets are available in the EuRoC and TUM formats. In our system’s implementation we used the TUM format to save coordinates.

Image 5. An example of a file in TUM format.

These files can hold two types of information. First, you can save the coordinates of the keyframes, which means not every position of the agent but one every 10 - 20 frames or so, depending on the environment and your movements. Second, you can save the coordinates of all the frames taken by the camera. Be wary of file size: if the map has been recorded for a long time, the file with every frame will be far larger than the one with keyframes only, so it is often more practical to save only the keyframes’ coordinates. The files are written by the functions SaveTrajectory + [format name] for frames and SaveKeyFrameTrajectory + [format name] for keyframes, and these must be called before shutting down the system. You don’t have to shut down the system afterwards: saving keyframe coordinates and then continuing on your path is a good way to save the path between stops. However, you cannot save keyframe coordinates after the system has been shut down.

// Save camera trajectory

slam.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectoryTUMFormat.txt");

slam.SaveTrajectoryTUM("FrameTrajectoryTUMFormat.txt");

slam.SaveTrajectoryKITTI("FrameTrajectoryKITTIFormat.txt");

Above you can see the functions for saving keyframes and frames in the TUM and KITTI formats. The string passed to the function must contain the full path of the file (directory plus file name); if you want to save the file in the working directory, as in this case, the file name alone is enough.
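A saved TUM-format trajectory is plain text with one pose per line: `timestamp tx ty tz qx qy qz qw` (position followed by an orientation quaternion). As a minimal sketch of how such a file could be consumed by your own tooling, the parser below reads that layout. The `Pose` type, the function name and the sample values are assumptions made for illustration; the sample is made up and is not output from a real run.

```python
from typing import List, NamedTuple


class Pose(NamedTuple):
    timestamp: float
    tx: float
    ty: float
    tz: float
    qx: float
    qy: float
    qz: float
    qw: float


def parse_tum_trajectory(text: str) -> List[Pose]:
    """Parse TUM-format trajectory text: 'timestamp tx ty tz qx qy qz qw' per line."""
    poses = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        fields = line.split()
        if len(fields) != 8:
            raise ValueError(f"expected 8 values per line, got {len(fields)}: {line!r}")
        poses.append(Pose(*(float(v) for v in fields)))
    return poses


# Made-up sample content for illustration only.
sample = """\
# timestamp tx ty tz qx qy qz qw
1.0 0.0 0.0 0.0 0.0 0.0 0.0 1.0
2.0 0.5 0.0 1.5 0.0 0.0 0.0 1.0
"""
trajectory = parse_tum_trajectory(sample)
print(len(trajectory), trajectory[-1].tz)
```

To read a file written by one of the save functions, pass its contents to the parser, e.g. `parse_tum_trajectory(open("KeyFrameTrajectoryTUMFormat.txt").read())`.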

But first and foremost, in order to save files, we need something to put into them. Whether the agent moves along a path while creating a new map or while using a pre-saved one, part of the SLAM technique is localization. The ORB-SLAM3 library constantly compares features extracted from previous and current frames and localizes the agent in the map. If you start the system by creating a new map, the agent can simply start moving along the path that should later be saved. If, on the other hand, you load an old atlas file and it loads successfully, the agent needs to wait for relocalization before moving forward. You can confirm that the atlas was loaded successfully by the terminal output message “End to load the save binary file”, as shown in Image 6.

Image 6. Terminal output while loading map file.

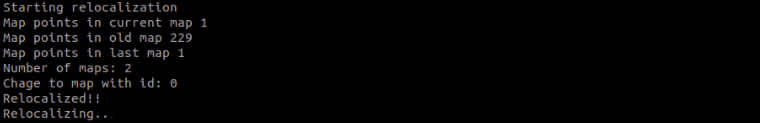

Thereafter, a new map is created on top of the old one (“Map id:2”, not 0, which would be the first map in the system). This is done so that new information can be written separately without adjusting the old map. Once the agent rotates gently left and right, the system recognizes the surroundings it has already seen and relocalizes the agent in the old map.

Image 7. Terminal output of relocalization.

As you can see above, if relocalization is successful, the agent is placed on the old map. The newly created map still exists, but the active map changes: the old map becomes the active one. The agent can now move and be localized in the old map.

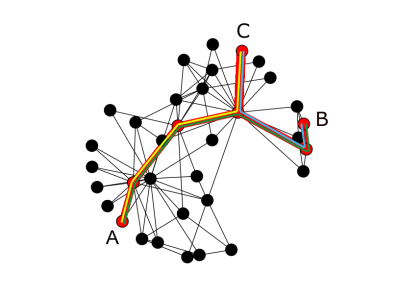

Besides localization, another important feature is the merging of maps. Let’s say you want to go from point A to point B and save that to a map, and then go from point A to point C and save that to a map as well (see Image 8). There are a few ways to do this. First, you can create two separate maps, one per path, implement a part of the system that chooses which path to navigate, and load a different map every time you move along A-B or A-C, even though a long part of both maps is clearly the same: the stretch from point A up to the intersection.

Image 8. Intersection of paths.

A second solution is to move in a way that lets the ORB-SLAM3 library merge the paths. Once the system is sure that you have moved along this path before, it merges the maps. This avoids storing a large amount of duplicate points, features and other information, because only the new information from unseen images is kept. Consequently, if you want to record a map that holds the paths from A to B and from A to C (and even from B to C!), all you need to do is move from point A to either B or C, slowly turn around, move towards the other one, and then return to point A (see Image 9).

Image 9. Moving instructions alongside a path for a successful merging of maps.

In the image, the move from A to B is the green line, from B to C the blue line, and from C to A the yellow line. If the maps are merged correctly, what gets saved is the red path, just like in Image 8.

Image 10. Terminal output when possibility to merge is detected.

As you can see, apart from the additional map creations that occurred while we were testing the system, once ORB-SLAM3 recognised the latest image as one it had already seen, it detected the possibility to merge maps and performed the merge successfully. When maps are merged, the recent map or maps are deleted; the only remaining map is the one into which they were merged.

Application of ORB-SLAM3

The ORB-SLAM3 library, and SLAM technology in general, can be put to practical use for planning routes. Since we use a camera, we can apply the visual information from our surroundings to detect obstacles. This helps when planning trajectories, because a path planned from the saved map should not contain immovable obstacles. A subsystem that handles manual selection of start and goal positions on the path can be implemented as well. In addition, as mentioned in the subsection “Mapping and localization”, the frame coordinate files can be used for all of the above and also for various types of navigation (text, speech, etc.).

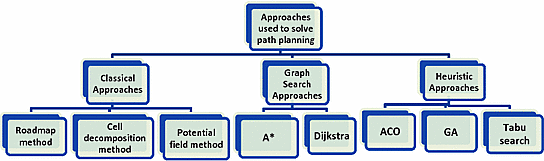

Creating a program that plans a path requires only a few things: a file with coordinates, which you can save by following the instructions in the subsection “Mapping and localization”, and a little bit of imagination. You can use all sorts of tools for path planning: store maps as graphs, use algorithms created specifically for planning trajectories between nodes (Dijkstra, A* and many more), and so on.
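As a minimal sketch of the graph idea, the code below turns a list of saved positions into a graph and runs Dijkstra’s algorithm on it. This is our illustration, not part of ORB-SLAM3: the rule for connecting nodes (consecutive positions, plus any two positions closer than a hypothetical `link_radius`) and the sample coordinates are assumptions.

```python
import heapq
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Graph = Dict[int, List[Tuple[int, float]]]


def build_graph(points: List[Point], link_radius: float) -> Graph:
    """Connect consecutive points, plus any two points closer than link_radius."""
    graph: Graph = {i: [] for i in range(len(points))}
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            d = math.dist(points[i], points[j])
            if j == i + 1 or d <= link_radius:
                graph[i].append((j, d))
                graph[j].append((i, d))
    return graph


def shortest_path(graph: Graph, start: int, goal: int) -> List[int]:
    """Dijkstra's algorithm; returns node indices from start to goal, or [] if unreachable."""
    dist = {start: 0.0}
    prev: Dict[int, int] = {}
    queue = [(0.0, start)]
    while queue:
        d, node = heapq.heappop(queue)
        if node == goal:
            break
        if d > dist.get(node, math.inf):
            continue  # stale queue entry, a shorter route was already found
        for neighbour, weight in graph[node]:
            candidate = d + weight
            if candidate < dist.get(neighbour, math.inf):
                dist[neighbour] = candidate
                prev[neighbour] = node
                heapq.heappush(queue, (candidate, neighbour))
    if goal not in dist:
        return []
    path = [goal]
    while path[-1] != start:
        path.append(prev[path[-1]])
    return path[::-1]


# Made-up positions: a walk that ends close to where it started.
points = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (2, 0, 1), (1, 0, 1), (0, 0, 0.5)]
graph = build_graph(points, link_radius=0.6)
print(shortest_path(graph, 0, 4))
```

Linking positions that are close in space, and not only consecutive in time, is what lets the planner take a shortcut through a place that was visited twice instead of replaying the whole recorded walk.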

Image 11. Types of algorithms for path planning [5].

It is important to think about saving significant points of the map while you are creating it (e.g. the start and end coordinates of a route that you’ll want to use later on). The library does not provide any method for saving those, so you need to implement the collection of these key coordinates yourself.
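One possible way to collect such key coordinates is a small store that maps a name to a position and can be written next to the atlas file. The sketch below is a hypothetical helper of our own, not a library feature; the class name, the JSON layout and the sample waypoints are assumptions.

```python
import json
from typing import Dict, Sequence, Tuple

Position = Tuple[float, float, float]


class WaypointStore:
    """Keeps named positions (e.g. route start and end) alongside the SLAM map."""

    def __init__(self) -> None:
        self._waypoints: Dict[str, Position] = {}

    def mark(self, name: str, position: Sequence[float]) -> None:
        """Remember a position under a human-readable name."""
        x, y, z = (float(v) for v in position)
        self._waypoints[name] = (x, y, z)

    def get(self, name: str) -> Position:
        return self._waypoints[name]

    def to_json(self) -> str:
        """Serialize all waypoints so they can be written to a file."""
        return json.dumps(self._waypoints, indent=2, sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "WaypointStore":
        """Rebuild a store from text produced by to_json."""
        store = cls()
        for name, position in json.loads(text).items():
            store.mark(name, position)
        return store


# Made-up waypoints for illustration.
store = WaypointStore()
store.mark("start", (0, 0, 0))
store.mark("door", (1.5, 0, 2))
restored = WaypointStore.from_json(store.to_json())
print(restored.get("door"))
```

In a running system, `mark` would be called with the current camera position whenever the user flags a place of interest, and the JSON text would be saved together with the map.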

Image 12. Shortest Path in a graph using NetworkX library [4].

Since we also have the frame coordinate files produced along the path taken while creating the map, a navigation subsystem can be built as well. There are distinct methods for navigating a path with some sort of commands, ranging from finite state machines and systems based on linear temporal logic to model predictive control. It all comes down to choosing the method that best fits the complexity of your system.
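As a much simpler illustration than any of the methods named above, the rule-based sketch below turns a planned path into text commands by looking at the signed heading change between consecutive segments. It is our own example under stated assumptions: the path is projected onto the ground plane as (x, z) pairs with x pointing right and z pointing forward, so a positive angle means a left turn. If your axes differ, swap the labels. The function name and the threshold are hypothetical.

```python
import math
from typing import List, Tuple

Point2D = Tuple[float, float]  # (x, z): assumed ground-plane coordinates


def turn_commands(path: List[Point2D], turn_threshold_deg: float = 30.0) -> List[str]:
    """Turn a polyline into 'go straight' / 'turn left' / 'turn right' commands."""
    commands = []
    for a, b, c in zip(path, path[1:], path[2:]):
        v1 = (b[0] - a[0], b[1] - a[1])  # incoming segment
        v2 = (c[0] - b[0], c[1] - b[1])  # outgoing segment
        cross = v1[0] * v2[1] - v1[1] * v2[0]
        dot = v1[0] * v2[0] + v1[1] * v2[1]
        angle = math.degrees(math.atan2(cross, dot))  # signed heading change
        if angle > turn_threshold_deg:
            commands.append("turn left")
        elif angle < -turn_threshold_deg:
            commands.append("turn right")
        else:
            commands.append("go straight")
    return commands


# Made-up path: forward, forward, left, then right.
route = [(0, 0), (0, 1), (0, 2), (-1, 2), (-1, 3)]
print(turn_commands(route))
```

The resulting strings can be shown as text or passed to a speech engine; a finite state machine would become useful once the agent’s progress along the path has to be tracked as well.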

Limitations

While testing ORB-SLAM3, we noticed that the whole system is sensitive to images that contain too few distinct features. If you walk down a narrow corridor and pass along a white wall that does not offer enough features for the system to track your location, a new map will be created (the system creates a new map every time it cannot localize for a short period of time). The maps can then be merged if the system manages to relocalize, but the system can also stay on the newly created map. In that case you keep building a new map even though you wanted to move in, and add information to, the old one. You would then have to activate the old map manually, after which ORB-SLAM3 should be able to relocalize you in it.

When creating a new map, we observed that if the surroundings of the agent change rapidly (especially if people pass in front of the camera), the agent’s position is shifted slightly in an unknown direction after ORB-SLAM3 relocalizes. This causes no problems while the map is being recorded. However, suppose such a map is to be used later for navigation and ORB-SLAM3 is not able to merge the maps and perform loop closing before the map is saved (loop closing is the action that readjusts the coordinates of the frames, “closing the loop” when you return to a point you started from). Then, once the binary file is loaded, the coordinates of the saved frames in the map might be inaccurate, and such a map, especially a large and complex one, may be unusable. You can address this by constantly monitoring the coordinates of the agent to see whether it deviates from the path it is supposed to follow and, if that happens, remaking the atlas file by restarting the system.
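One simple way to monitor the coordinates is to flag any step whose implied speed is higher than the agent can physically move. The sketch below is our own illustration of that check, not something the library provides; the function name, the `max_speed` limit and the sample values are assumptions, and the speed is expressed in map units per second.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, float, float, float]  # (timestamp, x, y, z)


def find_pose_jumps(samples: List[Sample], max_speed: float) -> List[int]:
    """Return indices of samples whose implied speed from the previous sample exceeds max_speed."""
    jumps = []
    for i in range(1, len(samples)):
        t0, *p0 = samples[i - 1]
        t1, *p1 = samples[i]
        dt = t1 - t0
        if dt <= 0:
            continue  # ignore out-of-order or duplicate timestamps
        speed = math.dist(p0, p1) / dt
        if speed > max_speed:
            jumps.append(i)
    return jumps


# Made-up track: steady 0.5 units/s, with one sudden 3-unit jump at index 3.
track = [
    (0.0, 0.0, 0.0, 0.0),
    (1.0, 0.5, 0.0, 0.0),
    (2.0, 1.0, 0.0, 0.0),
    (3.0, 4.0, 0.0, 0.0),
    (4.0, 4.5, 0.0, 0.0),
]
print(find_pose_jumps(track, max_speed=1.5))
```

A non-empty result while recording would be the cue to stop and remake the atlas rather than save a map with shifted coordinates.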

Tips and tricks on smoother experience while using ORB-SLAM3

Carefully check the requirements (libraries, dependencies, required and correct versions, etc.) before installing the ORB-SLAM3 library. While we built, checked and tested our system, new versions of some libraries were released, and we had to modify our system to stay compatible. Since that cannot be prevented, the best you can do is have everything prepared before actually using the library; afterwards you only have to maintain compatibility and can focus on implementing the other parts of the system.

Make sure to keep track of how you move when using ORB-SLAM3. ORB-SLAM3 is based on tracking features in the frames it receives from the camera. A frame has to contain a certain number of features (such as dots on a conspicuous painting that ORB-SLAM3 can “remember” as a feature) for ORB-SLAM3 to (re)localize correctly. So avoid making turns in featureless places, so that there are actual features to keep track of, and turn slowly and steadily; the same goes for moving along a path. When moving in a straight line, try not to shake the camera too much and move forward slowly and steadily, so that ORB-SLAM3 does not lose tracking.

A short glossary of useful functions provided by the library

slam.SaveKeyFrameTrajectoryTUM("KeyFrameTrajectoryTUMFormat.txt"); // keyframes, TUM format

slam.SaveTrajectoryTUM("FrameTrajectoryTUMFormat.txt"); // frames, TUM format

int state = slam.GetTrackingState();

slam.ActivateLocalizationMode();

slam.DeactivateLocalizationMode();

slam.Shutdown();

The functions that might need a little explanation are GetTrackingState, ActivateLocalizationMode, DeactivateLocalizationMode and Shutdown. The first one, GetTrackingState, returns one of the Tracking states: SYSTEM_NOT_READY, NO_IMAGES_YET, NOT_INITIALIZED, RECENTLY_LOST, LOST or OK. Knowing the tracking state lets you implement autonomous actions for each case: for example, when tracking was recently lost you can wait a bit for ORB-SLAM3 to try to (re)localize you, and when everything is going well (“Tracking::OK”) you can continue. The localization mode activation and deactivation functions are used by the library on its own. However, you can call them if you want to switch localization mode manually, for example to be able to turn in place without ORB-SLAM3 losing track, or to keep ORB-SLAM3 from localizing you on a part of the road you move along. Invoking the Shutdown function saves the atlas file in binary mode and then deallocates the SLAM system. Make sure to save the frame coordinates, if needed, using the first two functions in the glossary before shutting down a system that uses ORB-SLAM3.
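The idea of reacting to the tracking state can be sketched as a small decision function. This is a hypothetical illustration in Python rather than library code: the enum only mirrors the state names listed above (its numeric values are placeholders, not the library’s), and the action names and the `patience` threshold are assumptions.

```python
from enum import Enum, auto


class TrackingState(Enum):
    """State names mirror those listed above; the numeric values are placeholders."""
    SYSTEM_NOT_READY = auto()
    NO_IMAGES_YET = auto()
    NOT_INITIALIZED = auto()
    OK = auto()
    RECENTLY_LOST = auto()
    LOST = auto()


def next_action(state: TrackingState, lost_frames: int, patience: int = 30) -> str:
    """Pick a (hypothetical) agent action for the current tracking state."""
    if state is TrackingState.OK:
        return "continue"
    if state is TrackingState.RECENTLY_LOST:
        # Give the system some time to recover before asking the agent to act.
        return "wait" if lost_frames < patience else "look_around"
    if state is TrackingState.LOST:
        return "look_around"
    return "wait"  # not ready, no images yet, or not initialized


print(next_action(TrackingState.RECENTLY_LOST, lost_frames=5))
```

Mapping the integer returned by GetTrackingState onto such an enum is left out on purpose: the numeric values should be taken from the library’s own Tracking header rather than guessed.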

And there you have it: we’ve reviewed the key features that the ORB-SLAM3 library has to offer, including its usage and tips and tricks for getting a more productive outcome.

Bibliography

[1] C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel and J. D. Tardós, "ORB-SLAM3: An accurate open-source library for visual, visual-inertial and multi-map SLAM", IEEE Trans. Robot.

[2] C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel and J. D. Tardós, ORB-SLAM Project Webpage

[3] S. A. R. Florez, "Contributions by Vision Systems to Multi-sensor Object Localization and Tracking for Intelligent Vehicles"

[4] The shortest path in a networkx graph

[5] A. Koubaa, H. Bennaceur, I. Chaari, S. Trigui, A. Ammar, M. F. Sriti, M. Alajlan, O. Cheikhrouhou, Y. Javed, "Background on Artificial Intelligence Algorithms for Global Path Planning"

[6] The Robotics Back-end, "Create a ROS Driver Package – Introduction & What is a ROS Wrapper"
