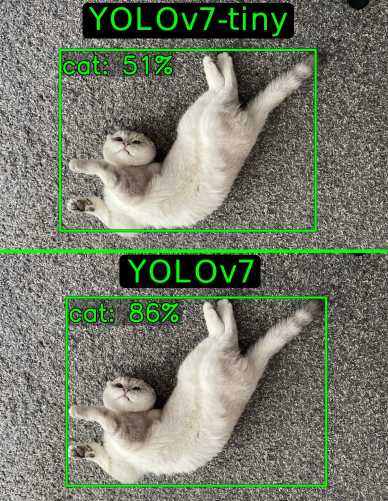

YOLOv7 vs YOLOv7-tiny

YOLO introduction

YOLO is an acronym for "You Only Look Once". It describes an open-source algorithm that uses a neural network for real-time object detection. The algorithm takes an image and outputs, for each detected object, its class, a confidence score, and a bounding box. All of this is computed in a single pass of the network. Other detectors process the same image in multiple stages instead, for example, detectors that use Region Proposal Networks. In this article, we compare the detection quality of two variants of YOLOv7, which at the time of writing is the latest, fastest, and most accurate version in the YOLO line. More information on the inner workings of YOLOv7 is available in the original paper [1].
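The sketch below makes the per-object output concrete. It shows one possible representation of a frame's detections and how a confidence threshold is commonly applied to them. The `Detection` class, the `filter_detections` helper, the 0.5 threshold, and the sample values are all hypothetical. They are not the API of the YOLOv7_package used in this article.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object: class label, confidence score, and bounding box.

    Hypothetical container for illustration only (not the YOLOv7_package API).
    The box is (x_min, y_min, x_max, y_max) in pixels.
    """
    label: str
    confidence: float
    box: tuple[float, float, float, float]


def filter_detections(detections: list[Detection], threshold: float = 0.5) -> list[Detection]:
    """Keep detections whose confidence is at least `threshold`, highest first."""
    kept = [d for d in detections if d.confidence >= threshold]
    return sorted(kept, key=lambda d: d.confidence, reverse=True)


# Hypothetical output for a single frame.
frame_output = [
    Detection("person", 0.86, (120, 200, 340, 410)),
    Detection("person", 0.31, (500, 180, 560, 300)),
    Detection("tv", 0.55, (10, 20, 90, 80)),
]

for det in filter_detections(frame_output, threshold=0.5):
    print(det.label, det.confidence)
```

The threshold sets the trade-off. A higher threshold discards more false positives, such as the reflection in Image 9. It also discards more true but low-confidence detections, such as many of the dark-room results.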

For the comparison, we chose YOLOv7 and YOLOv7-tiny because YOLOv7 can run on lightweight hardware (e.g., Jetson), but not at a high frame rate. Our tests help judge whether sacrificing detection quality for speed is worthwhile. We used different environments and distances to expose the shortcomings of YOLOv7-tiny.

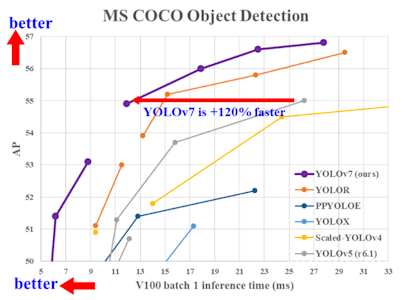

Image 1. YOLO model comparison. x-axis - inference time, y-axis - accuracy [1].

Test setup

Testing was done with a Luxonis DepthAI OAK-D Lite camera [2]. Detection used the Python YOLOv7_package (version 0.0.11) with its pretrained models from PyPI [3], and ROS2 Humble [4] handled data communication.

Table 1. Luxonis DepthAI OAK-D Lite monochrome camera specifications.

| Parameter | Value |
|---|---|
| DFOV / HFOV / VFOV | 86° / 73° / 58° |
| Resolution | 480P (640x480) |
| Effective focal length | 1.3 mm |
| Lens size | 1/7.5 inch |
| Pixel size | 3 µm x 3 µm |

Detection quality comparison

Light setting

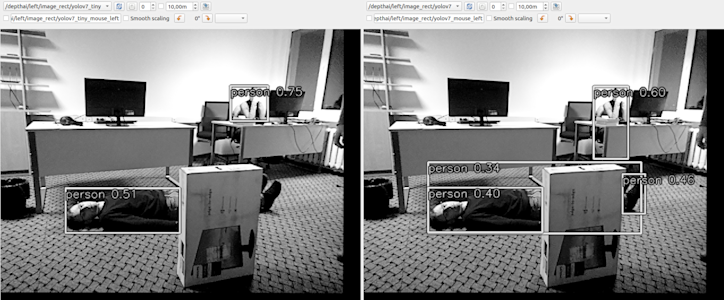

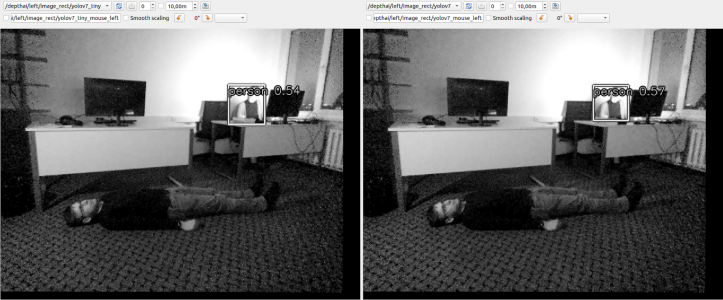

YOLOv7 detected a lying person in a well-lit environment reliably. Its confidence was above 80% in most tests and dropped to around 75% in a few settings. YOLOv7-tiny scored between 50% and 89%, depending on the number of obstructions.

Image 2. A well-lit room. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both the lying and the sitting person.

With obstruction

Image 3. A well-lit room with obstructions. YOLOv7-tiny detects both people but not their feet, while YOLOv7 detects everything.

Image 4. A well-lit room with obstructions. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both people.

Image 5. A well-lit room with obstructions. Both algorithms (YOLOv7 and YOLOv7-tiny) detect all 3 people with varying confidence.

Image 6. A well-lit room with obstructions. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both people.

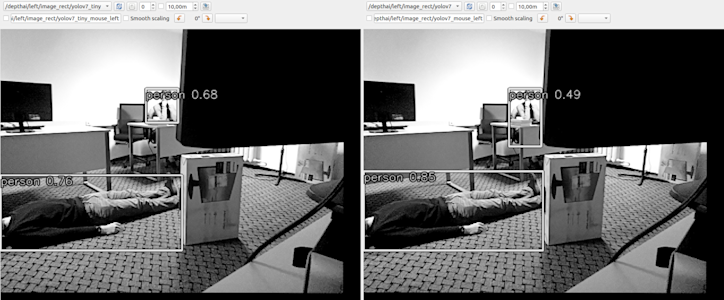

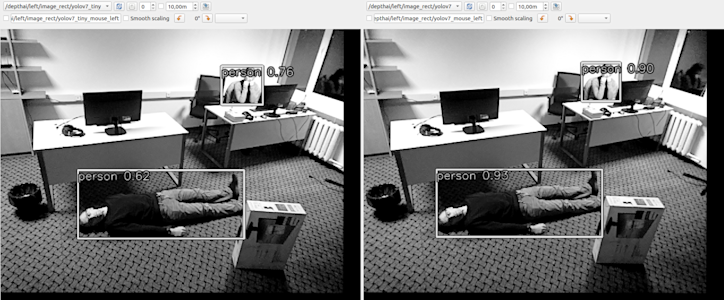

From a higher position

Image 7. A well-lit room. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both people.

Image 8. A well-lit room. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both people. YOLOv7 has higher confidence.

Image 9. A well-lit room. YOLOv7 detects both people. YOLOv7-tiny detects a reflection as a person and fails to detect the lying person.

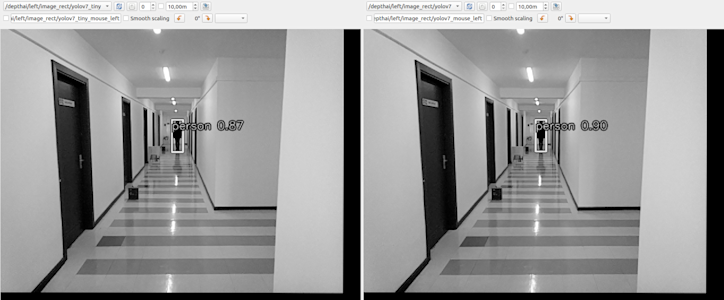

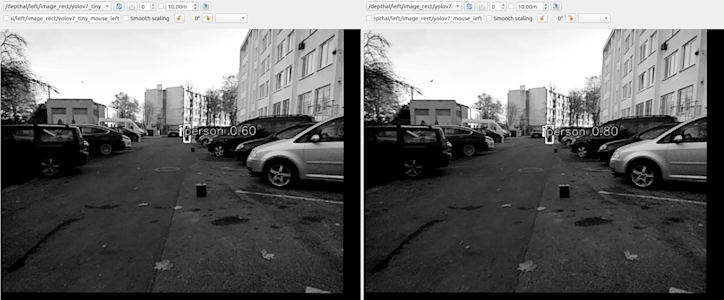

Good lighting, hallway, measuring distances

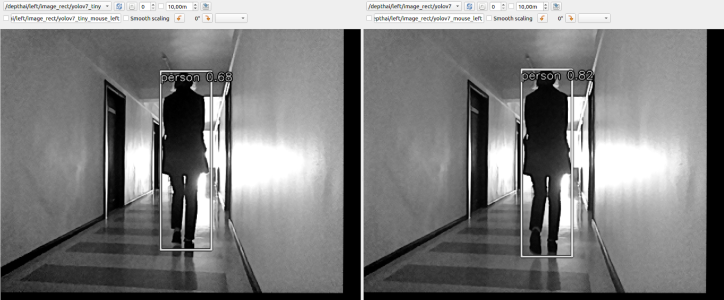

YOLOv7-tiny was worse than YOLOv7 at two things: detecting a person who is only partly visible at the edge of the camera's field of view, and detecting a person about 20 meters away.

Image 10. Hallway with good lighting. 20-meter distance. YOLOv7-tiny's confidence was much lower than YOLOv7's.

Image 11. Hallway with good lighting. 15-meter distance. YOLOv7 and YOLOv7-tiny produce similar results.

Image 12. Hallway with good lighting. 10-meter distance. YOLOv7 and YOLOv7-tiny produce similar results.

Image 13. Hallway with good lighting. Person next to the camera. YOLOv7-tiny fails to detect a part of the person.

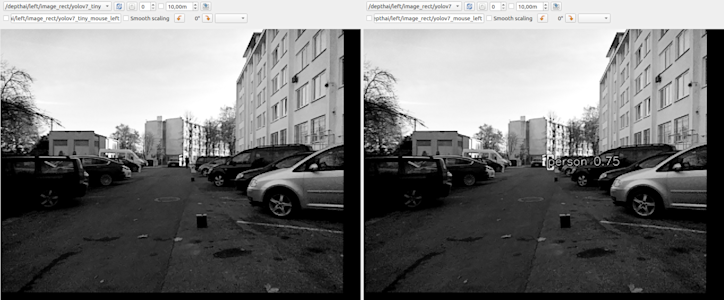

Light setting (shadow), outside, measuring distances

YOLOv7-tiny's accuracy starts falling beyond about 20 meters, and it could not reliably detect a person beyond 30 meters. At 15 meters, the difference between YOLOv7 and YOLOv7-tiny is negligible.
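A rough pinhole-camera estimate helps explain why detection degrades with distance. The farther away a person is, the fewer pixels they occupy. The sketch assumes the frames come from the 640x480 camera in Table 1, with its 58° vertical field of view. It also assumes an upright person 1.7 m tall. The person height and the pinhole approximation are simplifying assumptions, not measured values.

```python
import math

VFOV_DEG = 58.0        # vertical field of view from Table 1
IMAGE_HEIGHT_PX = 480  # vertical resolution from Table 1 (640x480)


def person_height_px(distance_m: float, person_height_m: float = 1.7) -> float:
    """Approximate on-image height (in pixels) of an upright person at a given distance.

    Pinhole approximation: the vertical extent visible at distance d is
    2 * d * tan(VFOV / 2), mapped linearly onto IMAGE_HEIGHT_PX rows.
    The default 1.7 m person height is an assumed value.
    """
    visible_height_m = 2.0 * distance_m * math.tan(math.radians(VFOV_DEG / 2.0))
    return IMAGE_HEIGHT_PX * person_height_m / visible_height_m


for d in (5, 10, 15, 20, 25, 30, 35):
    print(f"{d:>2} m -> {person_height_px(d):5.1f} px")
```

Under these assumptions, a person is about 74 px tall at 10 m, roughly 37 px at 20 m, and about 25 px at 30 m. This is consistent with the drop-off observed beyond 20 meters, though it does not prove the cause.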

Image 14. Outside, in a shadow. 35-meter distance.

Image 15. Outside, in a shadow. 25-meter distance.

Image 16. Outside, in a shadow. 25-meter distance, moving to the side.

Image 17. Outside, in a shadow. 15-meter distance.

Image 18. Outside, in a shadow. 5-meter distance.

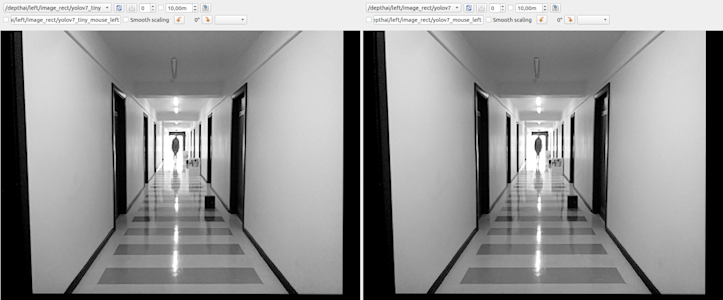

Good lighting, backlit hallway

Image 19. Hallway with good lighting, backlit. 20-meter distance. YOLOv7 and YOLOv7-tiny fail to detect the person.

Image 20. Hallway with good lighting, backlit. 10-meter distance. YOLOv7 and YOLOv7-tiny produce similar results.

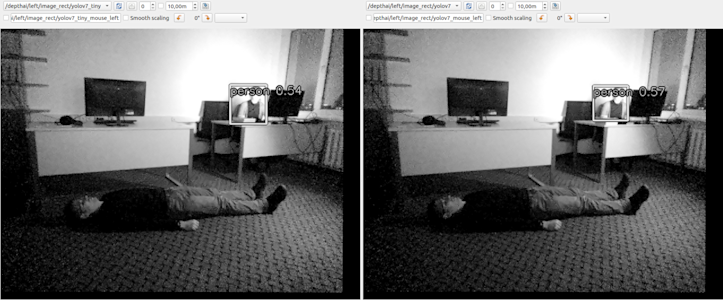

Dark setting

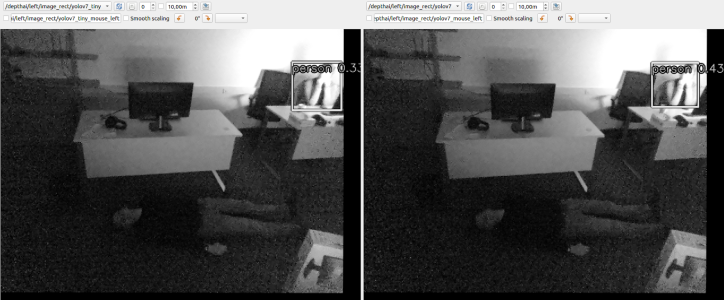

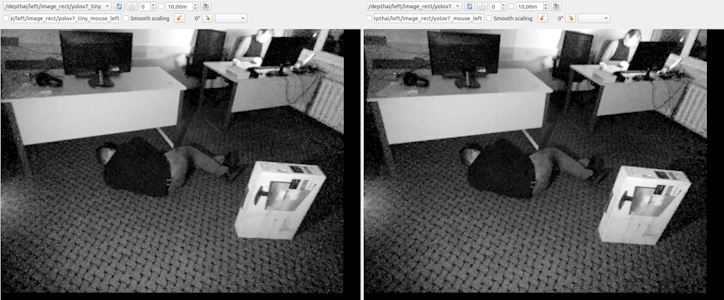

Detection accuracy falls drastically in the dark, and YOLOv7 was noticeably better than YOLOv7-tiny at detecting objects in darkness. The lying person was rarely detected. The sitting person was either detected with low confidence or detected only every few frames. This depended on conditions like camera position, lighting, and obstructions. Some body positions are easier to detect than others.
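One way to quantify "detected every few frames" is to report two numbers: a per-frame detection rate, and the median confidence of the frames where a detection does register. The sketch below assumes the best confidence for the person has already been extracted from each frame. The `summarize_track` helper, the 0.5 threshold, and the sample clip are hypothetical, not measurements from these tests.

```python
from statistics import median
from typing import Dict, List, Optional


def summarize_track(confidences: List[Optional[float]],
                    threshold: float = 0.5) -> Dict[str, Optional[float]]:
    """Summarize per-frame confidences for one person across a clip.

    Each entry is the best confidence for that person in one frame,
    or None when the detector reported nothing in that frame.
    """
    hits = [c for c in confidences if c is not None and c >= threshold]
    rate = len(hits) / len(confidences) if confidences else 0.0
    return {
        "detection_rate": rate,
        "median_confidence": median(hits) if hits else None,
    }


# Hypothetical 8-frame clip in which the person registers only now and then.
clip = [None, None, 0.41, None, 0.62, None, None, 0.55]
print(summarize_track(clip))
```

Reporting both numbers separates two failure modes. One is a model that finds the person in every frame, but with low confidence. The other is a model that finds the person confidently, but only in occasional frames.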

Image 21. Low light room. YOLOv7 detects both standing people, YOLOv7-tiny detects only one person, and neither detects the lying person.

Image 22. Low light room. YOLOv7 and YOLOv7-tiny detect only one person, while neither detects the lying person.

Image 23. Low light room. YOLOv7 detects the sitting and lying persons (with low confidence), but YOLOv7-tiny detects nothing.

Image 24. Low light room. YOLOv7 and YOLOv7-tiny do not detect the lying person.

From a higher position

Image 25. Low light room. YOLOv7 and YOLOv7-tiny do not detect anything.

Image 26. Low light room. YOLOv7 and YOLOv7-tiny do not detect the lying person while detecting the sitting person.

Image 27. Low light room. YOLOv7-tiny detects nothing, while YOLOv7 detects the lying person with low confidence and mistakes a monitor for a person.

Image 28. Low light room. Both algorithms (YOLOv7 and YOLOv7-tiny) detect both people because they are upright.

Image 29. Low light room. Neither algorithm (YOLOv7 or YOLOv7-tiny) detects either person.

Image 30. Low light room. YOLOv7-tiny does not detect anything while YOLOv7 detects the lying person.

Image 31. Low light room. Both YOLOv7 and YOLOv7-tiny detect the standing person, but YOLOv7-tiny's confidence is low.

Image 32. Low light room. YOLOv7-tiny detects a person behind the table (at low confidence), but YOLOv7 cannot.

Poor lighting, backlit hallway

If the camera has a poor dynamic range, a backlit scene can wash out the subject and reduce detection accuracy. In our tests, more even lighting fixed this. YOLOv7 performs better than YOLOv7-tiny in these conditions.

Image 33. Backlit hallway with poor lighting. Distance of 2.5 meters. Even with the person close to the camera, YOLOv7-tiny's confidence was lower than YOLOv7's.

Image 34. Backlit hallway with poor lighting. Distance of 7.5 meters. As the person was walking away, YOLOv7-tiny lost the detection sooner than YOLOv7.

Image 35. Backlit hallway with poor lighting. At a distance of about 10 meters, the person was not detected.

Movement

Movement causes motion blur, which decreases detection confidence. More light (or a better camera) reduces motion blur because the camera can use a shorter exposure time.
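The link between exposure time and blur can be estimated with a pinhole-camera model. An object moving sideways smears across as many pixels as it travels while the shutter is open. The sketch assumes the 640-pixel-wide camera with a 73° horizontal field of view from Table 1. It also assumes a walking speed of 1.4 m/s and a subject 5 m away. The exposure times are illustrative, not the camera's actual settings.

```python
import math

HFOV_DEG = 73.0       # horizontal field of view from Table 1
IMAGE_WIDTH_PX = 640  # horizontal resolution from Table 1


def motion_blur_px(speed_m_s: float, exposure_s: float, distance_m: float) -> float:
    """Approximate horizontal blur streak (in pixels) for an object moving sideways.

    The object travels speed * exposure metres while the shutter is open.
    Dividing by the metres covered by one pixel at that distance gives the
    streak length. Pinhole approximation; lens distortion is ignored.
    """
    metres_per_px = 2.0 * distance_m * math.tan(math.radians(HFOV_DEG / 2.0)) / IMAGE_WIDTH_PX
    return speed_m_s * exposure_s / metres_per_px


# Assumed walking speed of 1.4 m/s at 5 m; exposure times are illustrative only.
for exposure_ms in (2, 10, 33):
    blur = motion_blur_px(1.4, exposure_ms / 1000, 5.0)
    print(f"{exposure_ms:>2} ms exposure -> {blur:4.1f} px")
```

The streak length grows linearly with exposure time. A dimmer scene that forces a longer exposure therefore produces proportionally more blur for the same motion. Camera movement adds to this.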

Image 36. Movement, well-lit room. Neither algorithm (YOLOv7 or YOLOv7-tiny) detects the lying person.

Image 37. Movement, low light room. Neither algorithm (YOLOv7 or YOLOv7-tiny) detects anything.

Speed and hardware

Table 2. Performance on different hardware.

| | Lenovo Legion 5 | Jetson Xavier NX |
|---|---|---|
| Power mode | High-performance | Custom 20 W power mode for maximum performance |
| GPU | GeForce RTX 3050 Mobile, 4 GB GDDR6 memory @ 1.5 GHz, 2048 cores @ 0.7 GHz (up to 1.1 GHz) | NVIDIA Volta, 384 cores @ 1.1 GHz, 48 tensor cores |
| CPU | AMD Ryzen 5 5600H, 6 cores / 12 threads, 3.3 GHz (up to 4.2 GHz) | NVIDIA Carmel Arm v8.2, 6 cores, 1.9 GHz |
| RAM | DDR4 2x8 GB @ 3200 MHz | LPDDR4x 16 GB @ 1866 MHz |
| YOLOv7 | 33 FPS | 12 FPS (GPU bottleneck) |
| YOLOv7-tiny | 67 FPS | 26 FPS (CPU bottleneck) |

Summary

Compared with YOLOv7, YOLOv7-tiny was faster and less resource-intensive, at the cost of accuracy. On the laptop, both video streams ran at 30 FPS because the camera limited them. Detection alone, however, took 15 ms per frame (about 67 FPS) for YOLOv7-tiny and 30 ms per frame (about 33 FPS) for YOLOv7. We also ran the tests on a Jetson Xavier NX. There, YOLOv7 was limited by GPU performance (GPU utilization at 100%) and ran at 12 FPS. YOLOv7-tiny was limited by CPU performance (GPU utilization around 50% while one CPU core was at 100%) and ran at 26 FPS, which gave it a faster reaction time.
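The sketch below shows how per-frame inference time relates to frame rate. It also shows why the laptop's output stayed at 30 FPS regardless of the model. It assumes that capture and inference overlap, since the camera streams frames on its own, so the pipeline runs at the rate of its slowest stage. The function names are illustrative.

```python
def fps_from_latency_ms(latency_ms: float) -> float:
    """Convert per-frame inference time in milliseconds to frames per second."""
    return 1000.0 / latency_ms


def effective_fps(camera_fps: float, detector_fps: float) -> float:
    """Rate of a capture -> detect pipeline whose stages run concurrently.

    Under that assumption, the pipeline cannot run faster than its slowest stage.
    """
    return min(camera_fps, detector_fps)


CAMERA_FPS = 30.0  # camera-limited rate reported in the text

for name, latency_ms in (("YOLOv7", 30.0), ("YOLOv7-tiny", 15.0)):
    det_fps = fps_from_latency_ms(latency_ms)
    print(f"{name}: detector {det_fps:.0f} FPS, pipeline {effective_fps(CAMERA_FPS, det_fps):.0f} FPS")
```

On the Jetson, the detector became the slowest stage (12 and 26 FPS), so the pipeline rate there was set by the GPU or CPU rather than by the camera.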

In dim environments, the camera uses a slower shutter speed (longer exposure) and a higher ISO, which increase motion blur and image noise, respectively. This made both algorithms unsatisfactory for detecting a lying or fallen person. Even when something was detected, YOLOv7-tiny's confidence was lower. Good lighting (or a better camera) is necessary for higher accuracy, especially when the camera is moving.

Even in a well-lit environment, detection confidence was lower for a person lying down than for an upright person.

In an ideal environment (good lighting, distance between 2 and 20 meters, upright person), both algorithms have similar detection accuracy.

In a harsh environment (low light, very short or very long distances, lying person), YOLOv7 outperforms YOLOv7-tiny.

Given good lighting conditions and distances of less than 20 meters, YOLOv7-tiny could be used for faster detection if speed is needed and hardware is limited. YOLOv7 should be used in harsher conditions or when the hardware is capable of running it.
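The recommendations above can be condensed into a rough rule of thumb. The helper below is hypothetical. It only encodes the conditions reported in these tests: good lighting, a distance of roughly 2 to 20 meters, an upright person, and limited hardware. It is not a general model-selection method.

```python
def recommend_model(well_lit: bool, distance_m: float, person_upright: bool,
                    hardware_limited: bool) -> str:
    """Rule of thumb distilled from this article's tests (hypothetical helper).

    YOLOv7-tiny is suggested only when conditions match the "ideal environment"
    described above and the hardware cannot run YOLOv7 fast enough.
    """
    ideal_conditions = well_lit and 2.0 <= distance_m <= 20.0 and person_upright
    if ideal_conditions and hardware_limited:
        return "YOLOv7-tiny"
    return "YOLOv7"


print(recommend_model(well_lit=True, distance_m=10.0, person_upright=True, hardware_limited=True))
print(recommend_model(well_lit=False, distance_m=10.0, person_upright=True, hardware_limited=True))
```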

Literature

[1] C.-Y. Wang, A. Bochkovskiy, H.-Y. M. Liao, "YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors," 2022.

[2] B. Gilles, S. McLaughlin, V. Vyskcil, B. Dillon, T. Rassavong, "Luxonis," DepthAI Hardware.

[3] M. Volkovskiy, "yolov7-package," PyPI, Python Software Foundation.

[4] S. Macenski, T. Foote, B. Gerkey, C. Lalancette, W. Woodall, "Robot Operating System 2: Design, architecture, and uses in the wild," Science Robotics, vol. 7, May 2022.
